TL;DR version

I did some investigation into the Bing & Google spat. Once the Bing Toolbar is installed, IE 8 sends Microsoft a summary of every page URL that is requested. I found no evidence the toolbar singles out Google, or even search engines. I found no evidence of directly copying search results.

The results seem to indicate that Bing treats Google's search result pages the same as any other URL on the world wide web.

The rest, it would seem, is all spin.

Background

Over the past 48 hours Google & Bing have been having a public spat about search results. The opening salvo was Google's official blog post "Microsoft’s Bing uses Google search results—and denies it", followed by a counter-post from Microsoft, "Setting the record straight". Throughout, other parties have weighed in with comment and criticism, and some have simply poked fun.

Spinny Spin Spin Spin

Two things have struck me as bad in this debate. First off, the insane levels of spin. Google's original blog post minced no words about implying the actions were deliberately targeted, and went directly on the attack with statements like "some Bing results increasingly look like an incomplete, stale version of Google results—a cheap imitation."

Bing's counterspin was just as shocking, associating Google's tactics with "click fraud" and shifting the focus to other Bing features that have (allegedly) shown up in Google.

Google seem to have struck first in the spin war. Their official blog post painted Google as the innovator, and Bing as the unquestioned copycat. This left little room for either party to save face, but it left Google with a massive PR upper hand.

Science?

The other problem I see is with Google's methodology. The blog post explains how they concocted a series of experiments to "determine whether Microsoft was really using Google’s search results in Bing’s ranking."

The experiments produced results, but not in any kind of scientifically conclusive manner. There were no scientific controls, and the conclusions were presented as simply supporting Google's argument, with no other plausible explanation acknowledged.

My Investigation

I decided to run my own investigation. Armed with nothing but a humid Australian summer evening, Wireshark, and beer. I also have a Windows XP VM that I keep around just to run Adobe Lightroom.

First off, I installed a vanilla IE 8 and started my packet capture.

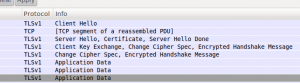

Straight away, I saw TLS encrypted connections to a Microsoft IP. Caught red handed?

A quick session with the excellent freeware API Monitor let me read the unencrypted data.

It seems this is IE 8's anti-phishing filter. A SOAP request is sent once for each domain the browser visits. The responses appear to be cached, so the same domain is not requested twice.

For example, here are the only two SOAP requests sent to Microsoft when I navigated to google.com.au, searched for "green fig jam", and clicked the third link. Excuse the XML.

You'll notice the only two URLs sent were the article I clicked on and some tracking URL on that same site. The search URL on http://google.com.au was not even looked up, because google.com.au had previously been cached as a safe domain.
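To make the caching behaviour concrete, here is a minimal Python model of it. It is a sketch under stated assumptions, not Microsoft's code: the class name `PhishingLookupCache` is hypothetical, the cache is assumed to be keyed on hostname, every lookup is assumed to come back "safe", and the example.com URLs are placeholders for the real article and tracking URLs. The tracking URL is placed on a separate hostname of the same site, which is what a per-hostname cache would need for it to get its own lookup.

```python
from urllib.parse import urlsplit


class PhishingLookupCache:
    """Hypothetical model of a per-hostname reputation cache.

    Assumption: a hostname that has already been checked (and judged safe)
    is never sent to the reputation service again in this session.
    """

    def __init__(self, known_safe=()):
        self._seen = set(known_safe)

    def needs_lookup(self, url):
        host = urlsplit(url).hostname
        if host in self._seen:
            return False          # cached: nothing is sent
        self._seen.add(host)      # assume the service answered "safe"
        return True               # first visit: one request is sent


# google.com.au was already cached from an earlier visit.
cache = PhishingLookupCache(known_safe={"google.com.au"})
visited = [
    "http://google.com.au/search?q=green+fig+jam",   # search page
    "http://www.example.com/green-fig-jam",          # placeholder article
    "http://stats.example.com/track?id=1",           # placeholder tracker
]
sent = [url for url in visited if cache.needs_lookup(url)]
print(sent)  # ['http://www.example.com/green-fig-jam', 'http://stats.example.com/track?id=1']
```

Under this model the search URL, and therefore the query, never reaches the reputation service at all.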

The anti-phishing service seems to be innocuous. It does not provide enough information to enable any "copying of search results".

Bing Toolbar

I couldn't find any other data sent by plain old IE 8 (including Suggested Sites). Time to install the Bing Toolbar and give away some more of my precious privacy.

Immediately the packet captures started getting interesting:

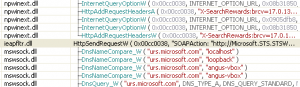

Unencrypted HTTP GETs to g.ceipmsn.com, one for every page that the browser loaded.

Here's the full encoded HTTP/1.1 session, as captured by Wireshark. Below are the individual GETs sent when I opened a new window and visited my website, projectgus.com.

This first GET seems to be an initial identifier sent when the browser opens (nothing is sent back in the response). Then there is a GET when I type projectgus.com into the address bar. Then one for the Telstra GPL Violation link on the front page. Then finally one when I click through to the GPL-Violations FAQ.

Among the interesting pieces of information that are sent:

- A unique identifier for at least my Windows login session, maybe my computer. I never saw it change. (MI=0E6B38A7645C4121BC051BDBF57482AF-1)

- Version numbers such as my VM's OS version (XP Pro), and some others. (OS=5.1.2600)

- Timestamp and timezone. (ts20110203221808108|tz-600)

- The URL loaded. (http://gpl-violations.org/faq/vendor-faq.html)

- The link text clicked on to get to that URL. (atvendor%20FAQ)

There are also some other interesting fields that appear in some other captures, such as the original URL if a redirect occurs. There doesn't seem to be any page content sent, just URLs and sometimes link text.
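The timestamp, timezone and link-text fields can be decoded with a few lines of Python. This is a sketch based only on the single set of values shown above; the field layout is inferred, not documented. In particular, treating the last three timestamp digits as milliseconds and reading `tz-600` as a Windows-style bias in minutes (UTC = local time + bias, so -600 means UTC+10) are assumptions, as are the function names.

```python
from datetime import datetime, timedelta, timezone
from urllib.parse import unquote


def decode_timestamp(field):
    """Decode a field like 'ts20110203221808108|tz-600' (layout assumed).

    Assumes: YYYYMMDDhhmmss in local time, then milliseconds; the tz value
    is a bias in minutes where UTC = local + bias.
    """
    ts_part, tz_part = field.split("|")
    digits = ts_part[len("ts"):]
    local = datetime.strptime(digits[:14], "%Y%m%d%H%M%S")
    millis = int(digits[14:] or 0)
    bias_minutes = int(tz_part[len("tz"):])
    offset = timezone(timedelta(minutes=-bias_minutes))  # -600 -> UTC+10
    return local.replace(microsecond=millis * 1000, tzinfo=offset)


def decode_link_text(field):
    """Strip the 'at' prefix and percent-decode the clicked link text."""
    return unquote(field[len("at"):])


when = decode_timestamp("ts20110203221808108|tz-600")
print(when.isoformat())                    # 2011-02-03T22:18:08.108000+10:00
print(decode_link_text("atvendor%20FAQ"))  # vendor FAQ
```

If the bias reading is right, the decoded local time lands on a late summer evening, which at least fits the circumstances of the capture.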

What about Google?

Here's another full session, recorded when visiting google.com.au to search for Microsoft Seaport, then clicking on the third search result.

Here is some of the pertinent data sent to Microsoft from that session:

http://www.google.com.au/search?hl=en&source=hp&biw=1676&bih=813&q=microsoft+seaport&aq=f...http://www.google.com.au/url?sa=t&source=web&cd=10&ved=0CFgQFjAJ&url=http%3A%2F%2Fsmartnetadmin.blogspot.com%2F2010%2F02%2Fremove-microsoft-seaport-search.html&rct=j&q=microsoft%20seaport&ei=ho1KTbQXjMlxw7vFtws...http://smartnetadmin.blogspot.com/2010/02/remove-microsoft-seaport-search.html

... these are just some of the 12 URLs requested by the browser as it went through these motions. Ironically, most of the other page loads seem to be Google, Blogspot & Doubleclick all recording tracking data.

Interestingly, the 'at' field showing the link text doesn't appear when tracking Google activity. I don't know if this is IE8 honouring robots.txt, or some other factor.

It's clear to see that if Bing mines this data then it could associate Google search queries with the resulting pages. This is simply because the query is encoded in Google's URLs, and then the result page URL follows. In this way, though, it does not look like Google is being treated any differently from any other web site with human-friendly URLs.
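The association doesn't need anything Google-specific. Below is a minimal sketch, assuming a miner sees the ordered list of URLs from one browsing session and treats any URL carrying a `q` parameter as a search; the function name `associate` and the pairing rule are hypothetical. The input URLs come from the capture above, with trailing parameters trimmed.

```python
from urllib.parse import parse_qs, urlsplit


def associate(urls):
    """Pair each search query with the next URL that has no ``q`` parameter.

    Hypothetical rule: any URL with a ``q`` parameter counts as a search,
    whatever the site. Nothing here knows about Google.
    """
    pairs = []
    pending = None
    for url in urls:
        params = parse_qs(urlsplit(url).query)
        if "q" in params:
            pending = params["q"][0]  # parse_qs decodes '+' and '%20' to spaces
        elif pending is not None:
            pairs.append((pending, url))
            pending = None
    return pairs


session = [
    "http://www.google.com.au/search?hl=en&source=hp&biw=1676&bih=813"
    "&q=microsoft+seaport&aq=f",
    "http://www.google.com.au/url?sa=t&source=web&cd=10&ved=0CFgQFjAJ"
    "&url=http%3A%2F%2Fsmartnetadmin.blogspot.com%2F2010%2F02%2F"
    "remove-microsoft-seaport-search.html&rct=j&q=microsoft%20seaport",
    "http://smartnetadmin.blogspot.com/2010/02/remove-microsoft-seaport-search.html",
]
print(associate(session))
```

Because the rule only looks for a `q` parameter, a search box on any other site would be paired in exactly the same way. A real click stream also contains tracking hits (Google, Blogspot and Doubleclick in the session above), so a stray tracking URL without a `q` parameter could get paired with the query instead; a real miner would presumably filter those, but nothing in the capture shows how.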

In particular, Google's experiment (injecting unique strings into special searches) could surely have caused these associations to occur, from the data seen here.

It's worth noting that, on the face of it, mining tracking data in this way could provide better search results - by mining where users really do click all over the web, and which pages they bounce through before finding useful content.
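As a purely hypothetical illustration of that idea (nothing in the capture shows how, or whether, Bing uses the data), (query, landing page) pairs gathered from many sessions could be counted, and each query's landing pages ranked by how often users ended up there. The function name and the example.com data are made up.

```python
from collections import Counter, defaultdict


def rank_landing_pages(pairs):
    """Rank landing pages for each query by observed click count (hypothetical)."""
    counts = defaultdict(Counter)
    for query, landing in pairs:
        counts[query][landing] += 1
    # most_common() sorts by count; ties keep first-seen order.
    return {query: [page for page, _ in counter.most_common()]
            for query, counter in counts.items()}


pairs = [
    ("green fig jam", "http://a.example.com/jam"),
    ("green fig jam", "http://b.example.com/jam"),
    ("green fig jam", "http://b.example.com/jam"),
    ("xqzv-token", "http://c.example.com/page"),
]
ranking = rank_landing_pages(pairs)
print(ranking["green fig jam"])  # ['http://b.example.com/jam', 'http://a.example.com/jam']
```

Note how a made-up query seen only once (`xqzv-token` here) still ends up with a ranked landing page. A single observed click is enough, which is consistent with Google's unique-string experiment producing associations without any Google-specific handling.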

Of course, there is nothing in this investigation to suggest that Bing doesn't have some special "google filter" running on their server side to extract Google clicks from the rest of the tracking data. However, there's also absolutely no evidence to suggest that this does happen. Occam's Razor would seem to apply.

So who done bad, again?

The behaviour I've seen explains Google's experiments, but does not support the accusation that Bing set out to copy Google.

Bing Toolbar is tracking user clicks and Bing could use the result to improve search results. I don't personally see any great distinction between this behaviour and Google's many tracking, indexing and scraping endeavours which they use to improve their own search results.

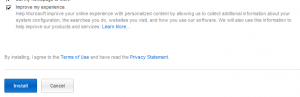

While I personally dislike the privacy implications, Bing Toolbar is pretty upfront about it when it gets installed (unlike much web page user tracking). The fact that the tracking uses plain HTTP rather than HTTPS, with the content in plaintext, would seem to indicate that they weren't seeking to hide anything.

Ultimately, the worrying question for me is whether web search has become so stagnant, and so generic, that this kind of name-calling and spin-doctoring may now be the only way to carve out a brand?
